This article is divided into two parts. If I may be blunt, the first part is for people who are not fully convinced that the Word of God is true, and are fearful of things of this world, despite what the promises of God offer. The second part speaks of our dominion over the elements of this world and freedom from fear, and supersedes the first part. I include the first part because I have expert knowledge about the subject and can settle the matter even from a godless, secular perspective.

Wondering about 5G? This article still applies. See my additional notes here.

In this part I am highly qualified to speak to the issue, because I am a college-educated, degreed, career electrical/computer engineer (early-retired), with over 30 years of active, professional experience in the industry. Although my specialty was computer/digital/datacomms electronic hardware design and development, for at least the last half of my career I also had to deal with electromagnetic phenomena in a design/development/testing capacity, and as my competence with such issues grew, I became something of a go-to person for them among my colleagues. As the speeds of digital electronics increased, we dealt more and more with electromagnetic effects in the design of the circuit boards, cabling, enclosures, and interconnects, even though the end products were fundamentally digital in nature. Government regulations also mandated that electromagnetic emissions and susceptibility be kept within strict limits; testing at accredited EMC test sites was required, and I actively participated in all of this.

With that as a preface, I will now endeavor to explain the nature of electromagnetic phenomena in simple terms, and then use that explanation to describe the effects of devices like microwave ovens, WiFi, and other such things. My writing assumes an audience with a high school level education.

Will those of you who shrink back at the thought of contemplating anything scientific or mathematical please give me an opportunity to bridge the gap with you? I have tried very hard to simplify everything in this article.

Most people are familiar with the effects of electric and magnetic fields. "Static," a static electric charge, makes clothes cling together when they come out of the dryer, among other things. Permanent magnets are used to stick things to the door of your refrigerator. The static electric charge is not spooky, and neither is the magnet.

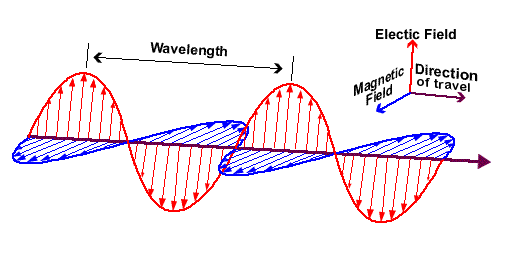

A varying electric field produces a magnetic field perpendicular to it, and a varying magnetic field produces an electric field perpendicular to it. Together, these propagate through space much as a sound wave spreads out from its source, or as ripples spread across a pond when you throw a rock into it.

Here is a diagram of a traveling electromagnetic wave. Again, remember that there is nothing spooky about the electric field or the magnetic field. I will qualify that later.

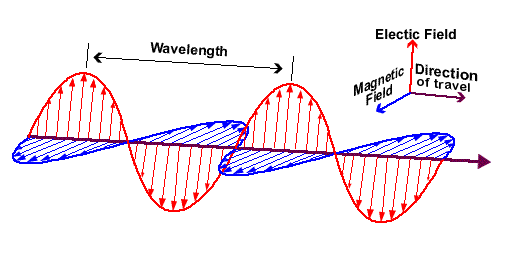

At the low end of the chart are "radio" waves. For example, an AM broadcast radio station operates at some fixed frequency of transmission, from about 550,000 cycles per second (550 kHz) to about 1,600,000 cycles per second (1.6 MHz). An FM broadcast radio station would be at some frequency from about 88,000,000 cycles per second (88 MHz) to 108,000,000 cycles per second (108 MHz).

Next is "microwave," the band in which microwave ovens, WiFi, cell phones, and many other devices operate. A microwave oven generates electromagnetic waves that oscillate at a frequency of 2,450,000,000 cycles per second (2.45 GHz).

"Micro-wave" is now somewhat of a misnomer, by the way. The length of a single "wave" of the "micro-wave" is about 5 inches, which is hardly a "micro" anything, from a human perspective, although it might have seemed so to Maxwell and Hertz in the late 19th century.

Next is "infrared," which lies just below the visible light spectrum. We cannot see it (although some snakes can sense it), but we can feel it as radiant heat on our bodies. Infrared radiation spans a frequency range of roughly 300,000,000,000 cycles per second (300 Gigahertz) to 400,000,000,000,000 cycles per second (400 Terahertz).

Next is "visible light," which we can see. Each color hue corresponds to a particular frequency. It ranges from 430,000,000,000,000 cycles per second (430 Terahertz) for the color red, to 730,000,000,000,000 cycles per second (730 Terahertz) for the color violet.

Ultraviolet light, which we cannot see, but a butterfly can, is immediately above the visible light range, then X-rays, which your dentists and doctors use, and gamma rays above that.
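
As a quick check on the wavelength figures used in this article, the wavelength of a wave in free space is simply the speed of light divided by its frequency. The Python sketch below does that arithmetic for a few of the frequencies mentioned above; the function name and the choice of 100 MHz as a typical FM station are illustrative assumptions, not anything from a standard.

```python
# Sketch: free-space wavelength = speed of light / frequency, shown in inches
# to match the units used in this article.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
METERS_PER_INCH = 0.0254


def wavelength_inches(frequency_hz: float) -> float:
    """Return the free-space wavelength, in inches, of a wave at frequency_hz."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz / METERS_PER_INCH


# Sample frequencies taken from the bands described above.
examples = {
    "AM radio (1.6 MHz)": 1.6e6,
    "FM radio (100 MHz)": 100e6,
    "Microwave oven (2.45 GHz)": 2.45e9,
    "Red light (430 THz)": 430e12,
}

for label, freq in examples.items():
    print(f"{label:28s} {wavelength_inches(freq):.6g} inches")
```

For 2.45 GHz this gives about 4.8 inches, consistent with the "about 5 inches" for a microwave oven, and for red light at 430 THz it gives roughly 0.000027 inches.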

The frequency range is also assigned to a scale of "energy," measured in electron volts. This is also easy to understand, since the same thing moving faster (oscillating faster, in this case) has more energy. If you throw a bullet at a person using your hand, it is doubtful that it will hurt him, but if you shoot that same bullet at a person out of a gun, it will most certainly hurt him, the only difference being the speed of the bullet. That might not be the best analogy, since the bullet does not oscillate (think of the journey of the bullet as one half an oscillation), so perhaps a jack-hammer or an engraver might fit the illustration better. Or perhaps just think of how you would take a dirty floor mat outside and shake the dirt off of it. The faster you shake it back and forth, the more it ejects the dirt. But if you just wave it slowly back and forth, very little dirt will be ejected from the floor mat.

Yes, higher frequencies are more energetic, and potentially more damaging. But notice that visible light, for example, is roughly 200,000 times the frequency and that much more energetic than microwave radiation. The length of a "wave" of light is about 0.000025 inches (25 millionths of an inch), which is microscopic in size. Why are some people afraid of microwave radiation, which has a wavelength of about 5 inches, as being something "spooky," but not visible light, which has a microscopic-size wavelength?
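
The "roughly 200,000 times" figure can be checked with the photon-energy relation E = h × f (Planck's constant times frequency), converted to electron volts. This is a minimal sketch; the 500 THz value is simply a representative visible-light frequency picked from the 430 to 730 THz range given above.

```python
# Sketch: photon energy E = h * f, converted from joules to electron volts.
PLANCK_J_S = 6.62607015e-34       # Planck constant (exact SI value)
JOULES_PER_EV = 1.602176634e-19   # one electron volt (exact SI value)


def photon_energy_ev(frequency_hz: float) -> float:
    """Energy of a single photon at frequency_hz, in electron volts."""
    return PLANCK_J_S * frequency_hz / JOULES_PER_EV


microwave_ev = photon_energy_ev(2.45e9)   # microwave oven frequency
visible_ev = photon_energy_ev(500e12)     # representative visible light (assumed, about orange)

print(f"microwave: {microwave_ev:.3g} eV")              # about 1.01e-05 eV
print(f"visible:   {visible_ev:.3g} eV")                # about 2.07 eV
print(f"ratio:     {visible_ev / microwave_ev:,.0f}")   # 204,082
```

Because both energies are proportional to frequency, the ratio is just the ratio of the frequencies, which is why "200,000 times the frequency" and "200,000 times the energy" are the same statement.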

Also, note that since the creation we have been bombarded by cosmic radiation of nearly all frequencies, mostly generated by the sun.

The microwave oven is a very simple appliance and is not spooky at all. The way it works is also very simple to understand.

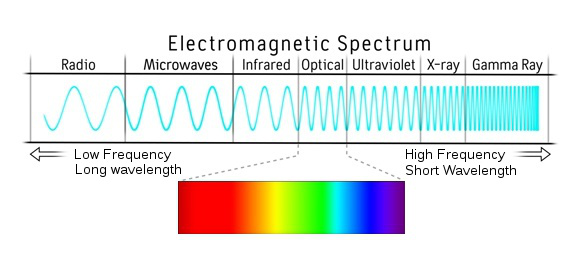

Look at the following picture of two water molecules:

Of course, most everyone knows that a water molecule, "H₂O," has one oxygen and two hydrogen atoms. Notice the alignment of the three atoms in each water molecule. The diagram is not arbitrary; the angle between the two hydrogen atoms, measured at the oxygen atom, is 104.5 degrees. The molecule carries a permanent, static electric polarity: one side is more negative, and the other side is more positive. With electrically charged things, as with the poles of magnets, unlike charges attract, and like charges repel. Most people have played with magnets, so they know this.

If you pass an electromagnetic wave through water, the molecules will all physically move to try to align themselves with the wave's alternating electric field, because like charges repel, and unlike charges attract. Since each molecule moves of its own accord, independently of the molecules near it, they rub against each other, so to speak, as they move to line up with the electric field. This friction causes heat.

Now, rub your two hands together rapidly. Do it now. Rub them harder. Faster. Did your skin get hot? Why is that? One hand was not hotter than the other hand, and there was no external source of heat. They got hot due to simple friction of the molecules of one hand rubbing against the molecules of the other hand. If you slid down a rope, holding it with your bare hands, you would generate enough heat to cause a "rope burn." The rope was not hot, but it heated your hands due to friction. Heat, after all, is just the kinetic (motion) energy of the molecules within a substance.

This is how a microwave oven works, by physically moving the molecules back and forth, generating frictional heat. Are you disappointed? Is it really that simple? Yes, it is that simple. It is not spooky at all. The microwave radiation generates heat within the food without being hot itself, and the food is heated without something already hot heating it, unlike how we normally heat food.

Inside the metal box of your microwave oven, a device called a magnetron generates about 1000 Watts of electromagnetic radiation, which the metal box confines. As the radiation passes into the food, the water, fat, and other polar molecules get hot due to simple friction of the molecules physically rubbing against each other, so to speak. It is not spooky at all.

By now your hands that you had been rubbing together aren't hot anymore. The heat that you had felt quickly went away. That is because you were only rubbing the skin at the surface. Then, when you stopped, the surface heat was quickly absorbed by the inside of your hands, which was not heated. But what if you could "rub" all the molecules deeper down into your hand at the same time? That's what a microwave oven does. The microwaves penetrate something like a half inch to a couple of inches into the food (the power diminishes with depth as it is absorbed by the food), causing friction in all that at once.
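
The way the power dies away with depth can be pictured with a simple exponential absorption model, in which each additional increment of depth absorbs the same fraction of whatever power reaches it. The sketch below assumes a hypothetical "penetration depth" of 1 inch (the depth at which about 37% of the surface power remains); real foods vary with water content and temperature, so treat the numbers as illustrative only.

```python
import math

# Hypothetical penetration depth: the depth at which the microwave power has
# fallen to 1/e (about 37%) of its value at the surface. Real values depend on
# the food; 1 inch is an assumption chosen for illustration.
PENETRATION_DEPTH_IN = 1.0


def fraction_remaining(depth_in: float,
                       penetration_depth_in: float = PENETRATION_DEPTH_IN) -> float:
    """Fraction of the surface microwave power still present at depth_in inches,
    using a simple exponential (Lambert-law) absorption model."""
    return math.exp(-depth_in / penetration_depth_in)


for depth in (0.0, 0.5, 1.0, 2.0, 3.0):
    print(f"{depth:3.1f} in deep: {fraction_remaining(depth):5.1%} of surface power")
```

Under that assumption, about 61% of the surface power remains half an inch in, about 14% at two inches, and about 5% at three inches, which is the sense in which the microwaves penetrate "a half inch to a couple of inches."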

When the magnetron turns off, the electromagnetic radiation stops, and nothing persists of it, except the heat of the food which had been heated. Nothing else in the food is altered, except that which is caused by simple heat from friction.

Now aren't you relieved to know how microwave ovens work, and that nothing spooky remains in the food after you take the food out?

I will now state something surprising. From a human health and nutritional point of view, the microwave oven is the safest and healthiest way to heat and cook food.

The reason for this is simple as well. If you want to retain the nutrients in food, you want to heat it for the shortest time possible, and only heat it. If you boil food in a pot, some nutrients will leach out into the water, which you will then dump down the drain. Also, as the length of cooking time increases, so do the chemical changes inside the food, as it becomes less and less "raw." What is worse, when you bake, deep fry, or grill food, the outside of the food is actually burned. We like the taste of a little of that brown, burned flavor, but it is still burned, representing the greatest chemical alteration of the food, and the greatest decrease in nutritional value. The worst way to cook food, from a health and nutrition point of view, is to charcoal grill it, for the same reason. (But it sure is yummy!)

Microwave heating of food is rapid and efficient, and the food can be consumed more near to its raw, healthy state than any other kind of cooking will allow.

The problems with microwave cooking have to do with several things. There is a constant issue of uneven cooking (this is the reason for the internal "stirring" fan and turntables, and for the instructions telling the user to keep rotating the food), due to the difficulty of distributing the microwave radiation evenly inside the oven cavity. Then, since there is no "browning," that nice (albeit not healthy) flavor that you get, such as with the crust of baked bread, is absent. Yuck! Who would want to eat bread that did not have a crust? Decreased cooking time also means less time for spices and other flavorings to work their way through a baked dish. So, we tend to use the microwave oven more for re-heating prepared food than for cooking it, because of the taste. And then there are secondary problems, such as cooking in plastic containers, which you would never dream of putting in a conventional oven. The plastic itself does not get hot from the microwaves, but the food can get hot enough to melt it, and then you end up eating a bit of the plastic that melted into the food.

If I were motivated to do so, I could come up with some scary, but biologically and nutritionally valid, explanations about how bad the chemically altered food from conventional cooking that browns and burns the food is for you, including chemical carcinogens created in the process, which the microwaved food does not have. But I won't go there. I only mention it in passing to bring to light the hypocrisy of the anti-microwave agenda.

But overall, you can see that microwave cooking is not so mystical or spooky at all. Nor is it foundationally "unsafe" or "unhealthy" -- quite the opposite. Again, just rub your two hands together rapidly until your skin warms up. Are you afraid of that?

If you browse the internet, where you can find people claiming just about anything and pretending that it is authoritative, you will find articles about the "horrors" of what microwave cooking does to food. Everything that they explain, like "cells exploding," "proteins unraveling," and so on, is what happens to any food that is heated to a high temperature. That is what all cooking does, and this helps with digestion; your body will finish the job, and "unravel" all proteins back to their original amino acid forms, so that your body can build human proteins out of them. And if you put a living animal in a microwave oven, it will surely die, because it will be burned to death, its living cells "exploding" and its living proteins unraveling, and so on. But since all cooking does this, simply due to the effects of heat, this makes such scary-tales about microwave ovens a half-truth, which is a lie.

Then they make up caricatures such as this:

One man, William P. Kopp, even claimed that the microwave oven was "banned in Russia" in 1976, and his paper has been circulated widely. To date, no one has been able to find record of any such law in Russia, or any revocation during Perestroika, or any such announcement at any international conference, or any other evidence that this ever happened, including any Soviet-era communist or anti-communist propaganda that you might expect from it, or any Russian who could vouch for it ever being so. A Russian friend of mine pointed out that the communists of that era cared little to nothing for the people's health and welfare in the first place, but only their own political agenda, so it would be ridiculous that they would ever make such a law. So the one man, Kopp, was either making up the story or operating on hearsay, plain and simple.

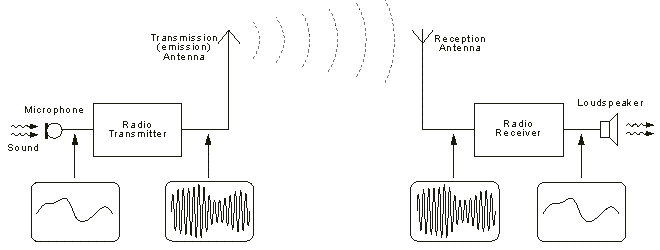

When you pass an alternating electric current through a wire, it becomes an antenna, and electromagnetic waves propagate out from that antenna. The reverse is also true: When an alternating electromagnetic wave passes through a wire, it acts as an antenna, producing an electric current that can be amplified by an electric circuit. If the wave is modulated with information at its source, the information can be extracted at its destination. This is how all radio works.

As far as the intensity of the electromagnetic field goes, it is important to know how much power is generated by the source, and how far you are from that source.

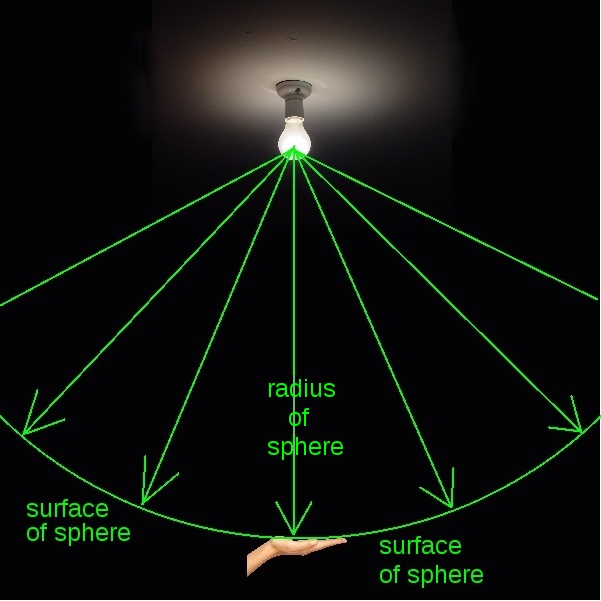

As an analogy and object lesson, find a 100 Watt incandescent light bulb that is on, and hold your hand very close to it. Most of what you feel is electromagnetic radiation in the infrared and visible light range. If you hold your hand too close to the bulb for too long, it can burn your skin. Now move your hand twice as far from the bulb. The heat will be one fourth of what it was. Move your hand twice as far again. The heat will again be one fourth of what it just was (one sixteenth of what it originally was). Every time you double the distance, the effect drops to one fourth; every time you increase the distance tenfold, the effect drops to one one-hundredth, and so on.

The amount of radiation received is inversely proportional to the square of the distance (assuming, obviously, that no object in the way is blocking some of it, and that nothing off to the side is reflecting additional radiation back to you). The formula comes simply from the surface area of a sphere, $4\pi r^2$, as the radius $r$ (the distance from the center to the surface) increases. Remember your high school geometry? Imagine the source of radiation, and the increasing size of a sphere surrounding it, as the distance (radius) increases. The original power is distributed evenly over the increasing surface area of this imaginary sphere, so the power per unit area decreases in proportion. The amount of the original radiated power that you can grab with your hand decreases with distance by that amount.
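
Written as formulas (a worst-case sketch, assuming the source radiates equally in all directions and that everything reaching the target is absorbed), with $P_{\text{source}}$ the radiated power, $A$ the area doing the catching (a hand, or a whole body), and $r$ the distance from the source, the reasoning above is:

$$
P_{\text{received}} = P_{\text{source}} \cdot \frac{A}{4\pi r^2},
\qquad
\frac{P_{\text{received}}(r_2)}{P_{\text{received}}(r_1)} = \left(\frac{r_1}{r_2}\right)^2
$$

Doubling the distance ($r_2 = 2r_1$) gives $(1/2)^2 = 1/4$, and a tenfold increase ($r_2 = 10r_1$) gives $(1/10)^2 = 1/100$, matching the light-bulb experiment.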

I'll take the liberty of estimating the surface area of a person's hand at roughly 20 square inches. If you hold your hand 40 inches (about 3.3 feet) away from the center of a 100 Watt light bulb, then we can calculate how much of the "100 Watts" of radiation your hand will be exposed to at that distance. (This assumes that the whole 100 Watts is radiated, which I realize it is not, and that you can capture and absorb all of what you receive, which you can't; the same applies to my other examples of microwave and other radiation that follow. All my illustrations and calculations are therefore pessimistic and worst-case, not optimistic and hopeful.) The surface area of a sphere with a radius of 40 inches is about $4\pi (40\ \text{in})^2 \approx 20{,}000$ square inches. Therefore, your hand will be subject to $100\ \text{Watts} \times \frac{20\ \text{in}^2}{20{,}000\ \text{in}^2} = 0.1$ Watts, about one thousandth of the original radiated power.
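
The same worst-case arithmetic can be written as a small function. This is a sketch under the article's own assumptions (the whole source power is radiated equally in all directions, and every bit landing on the target is absorbed); the function name is only illustrative.

```python
import math


def intercepted_power(source_watts: float, distance: float, area: float) -> float:
    """Worst-case power (Watts) landing on a target of the given area.

    The source power is assumed to spread evenly over a sphere whose radius is
    the distance to the target. `distance` and `area` must use matching units
    (inches with square inches, or feet with square feet).
    """
    sphere_area = 4 * math.pi * distance ** 2
    return source_watts * area / sphere_area


# 100 Watt bulb, a hand of about 20 square inches, 40 inches away
hand_watts = intercepted_power(100, 40, 20)
print(f"hand at 40 in: {hand_watts:.3f} W")   # prints 0.099, i.e. about 0.1 W

# Doubling the distance cuts the intercepted power to one fourth
print(f"ratio at 80 in: {intercepted_power(100, 80, 20) / hand_watts:.2f}")  # 0.25
```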

Now go to the other end of the room, or even out the door and into another room, if you can still see the light bulb from there. Put your hand up. Imagine a sphere with center at the 100 Watt bulb and surface at your hand, and the 100 Watts distributed around the surface of that huge sphere, diluting the radiated power by that same factor. How much of the surface of that sphere does your hand represent out of the total area? How able is the 100 Watt bulb to burn you now, even if you stood there all day long? It is now negligible, and silly to talk about. Nobody would claim that the 100 Watt bulb would have any effect on the human body at any significant distance.

This is how electromagnetic radiation works as it spreads out from its source. It requires the design of very sensitive electronics to pick up the minuscule radio signals that cause minuscule currents to flow in a receiving antenna at any significant distance from the source. It was a feat of 20th-century electrical engineering to design an apparatus that would detect, select, and amplify those almost-negligible electric currents, which otherwise caused no effect on anything at all, and were not even previously detectable.

And remember, visible light has on the order of 200,000 times the energy of microwave radiation in electron volts, by comparison.

Visible light is not "ionizing radiation," and microwave radiation, on the order of 200,000 times less energetic than light, is even further from it. Ionizing radiation starts in the ultraviolet range, and the "ionization," or "nuclear," effects increase with frequency above that. Ionizing radiation is fundamentally able to cause persistent changes, at a molecular level, to the material that it passes through: not changes due to frictional heat, but actual chemical and nuclear changes caused by the radiation itself. If you lie out in the sun for too long, the ultraviolet radiation can cause a "sunburn." A sunburn is not a heat burn, since it does not "burn" on the spot, although the eventual symptoms are similar; rather, the ultraviolet light chemically alters and damages the skin, and the destruction of tissue becomes apparent (and painful!) only after a few hours. Because these effects increase with frequency, devices that generate ionizing radiation at frequencies above the ultraviolet are heavily regulated. You might be able to buy an ultraviolet "black light" bulb in a consumer retail store, and maybe even a sun-tanning lamp, but not an X-ray machine.

A microwave oven is allowed to leak no more than 0.005 Watts of power per square centimeter anywhere at 2 inches from the surface of the appliance.

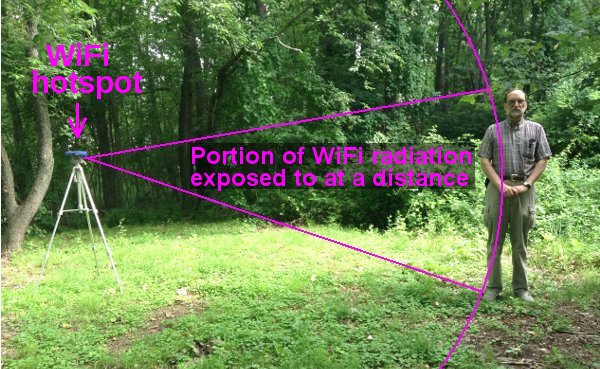

A WiFi hotspot can generate up to 0.1 Watts at the same frequency. If you cup both of your hands over the antenna, you might be exposed to all of that 0.1 Watts. (Note: The WiFi hotspot in the picture below has two antennas. I have my hands over one of them.)

But, at any significant distance, the previous illustration of the surface area of a sphere applies.

So, now suppose you are 10 feet away from the WiFi hotspot. The 0.1 Watts will be distributed over a surface area of $4\pi (10\ \text{ft})^2 \approx 1{,}260$ square feet. A full-grown adult might have a body surface area (planar) of perhaps 10 square feet (https://www.medcalc.com/body.html). Therefore, he is subject to $0.1\ \text{Watts} \times \frac{10\ \text{ft}^2}{1{,}260\ \text{ft}^2} \approx 0.0008$ Watts of that radiated power at that distance. If he is 30 feet away, then that would reduce to about 0.0001 Watts (i.e., a thousandth of the power that he could be exposed to by cupping his hands around the antenna of the WiFi device). And so on.

To further illustrate the effect, let's calculate how much power that whole "10 square foot" person receives from a 100 Watt light bulb 100 feet away (assuming no walls). How able is the light bulb to heat that body, much less burn it? The area of the sphere is now about $4\pi (100\ \text{ft})^2 \approx 125{,}000$ square feet, so $100\ \text{Watts} \times \frac{10\ \text{ft}^2}{125{,}000\ \text{ft}^2} = 0.008$ Watts.

Now, did you catch that this "0.008 Watts" from the 100 Watt light bulb, 100 feet away, is ten times as powerful as the "0.0008 Watts" that you would get, 10 feet away, from the 0.1 Watt WiFi hotspot?
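
Here is the same worst-case calculation for the WiFi and light-bulb comparison, as a Python sketch. It reuses the article's assumptions (a 0.1 Watt hotspot, a 100 Watt bulb, a 10 square foot body, everything radiated evenly in all directions and fully absorbed); the helper name is hypothetical.

```python
import math

BODY_AREA_SQ_FT = 10  # planar body area assumed in the text


def body_exposure_watts(source_watts: float, distance_ft: float) -> float:
    """Worst case: the body intercepts its share of a sphere of radius distance_ft."""
    return source_watts * BODY_AREA_SQ_FT / (4 * math.pi * distance_ft ** 2)


wifi_10_ft = body_exposure_watts(0.1, 10)
wifi_30_ft = body_exposure_watts(0.1, 30)
bulb_100_ft = body_exposure_watts(100, 100)

print(f"WiFi, 10 ft:  {wifi_10_ft:.1e} W")              # 8.0e-04
print(f"WiFi, 30 ft:  {wifi_30_ft:.1e} W")              # 8.8e-05
print(f"Bulb, 100 ft: {bulb_100_ft:.1e} W")             # 8.0e-03
print(f"bulb / WiFi:  {bulb_100_ft / wifi_10_ft:.1f}")  # 10.0
```

The 30 foot figure (roughly 0.0001 Watts) is the one used later for the all-day WiFi comparison.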

A cell phone can generate up to 2 Watts at frequencies typically somewhat below that of a WiFi hotspot, but in the same ballpark (the range is 0.7 to 2.6 GHz). If you put the cell phone to your ear, you might get up to 1 Watt going into your body, and 1 Watt would go in the other direction away from your body.

Obviously the cell phone, which needs to communicate all the way to the nearest cell tower, a long way away, is far more powerful than either the WiFi (2 Watts versus 0.1 Watts) or the leakage from the microwave oven. It is so immensely greater that no one who uses a cell phone should even consider the radiation produced by the microwave oven or the WiFi. Remember the illustration of the power of a 100 Watt incandescent light bulb at a distance, compared to up close.

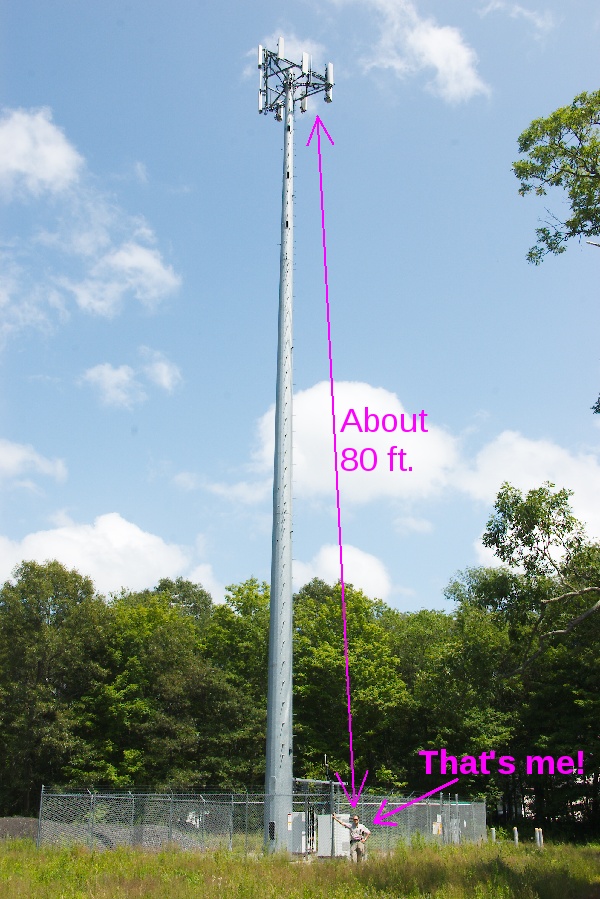

Nor should one be concerned about the cell phone tower. The FCC in the U.S. permits a transmitted power of up to 500 Watts per channel, but in practice the antennas radiate around 5-10 Watts, because of the inefficiency of the antenna (https://www.fcc.gov/guides/human-exposure-rf-fields-guidelines-cellular-and-pcs-sites). That's at the transmitting antenna.

Assuming that a cell phone tower antenna does radiate 10 Watts into the air, let's do the math again, assuming you are just 100 feet from the antenna of the cell phone tower. (If you look at the picture below, that's usually about as close as you can get to a cell tower antenna, anyway.) Approximately, $4\pi (100\ \text{ft})^2 \approx 125{,}000$ square feet. Now the "10 square foot" person is exposed to $10\ \text{Watts} \times \frac{10\ \text{ft}^2}{125{,}000\ \text{ft}^2} = 0.0008$ Watts of that radiated power. Compare this with the 0.1 Watts at the WiFi access point or 2 Watts from the cell phone pressed up against your head. (Note: I realize that there is more than one antenna in a cell phone tower, and more than one "channel" transmitted. Again, I am just citing a crude measure.)

Now, did you catch that this "0.0008 Watts" from the 10 Watt cell phone tower, 100 feet away, is one tenth as powerful as the "0.008 Watts" from the 100 Watt light bulb, 100 feet away?

More likely, the cell tower is a mile away. $4\pi (5280\ \text{ft})^2 \approx 350{,}000{,}000$ square feet, and $10\ \text{Watts} \times \frac{10\ \text{ft}^2}{350{,}000{,}000\ \text{ft}^2} \approx 0.0000003$ Watts (about $3 \times 10^{-7}$ Watts) of exposure to the "10 square foot" person. Compare this with the 0.1 Watts at the WiFi access point or 2 Watts from the cell phone pressed up against your head.
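
The cell tower numbers follow from the same formula. This Python sketch assumes, as the text does, a single antenna radiating 10 Watts evenly in all directions with a clear line of sight, and a 10 square foot body that absorbs everything reaching it; the helper name is hypothetical.

```python
import math

BODY_AREA_SQ_FT = 10   # planar body area assumed in the text
TOWER_WATTS = 10.0     # assumed radiated power of one tower antenna, per the text
FEET_PER_MILE = 5280


def body_exposure_watts(source_watts: float, distance_ft: float) -> float:
    """Worst case: the body intercepts its share of a sphere of radius distance_ft."""
    return source_watts * BODY_AREA_SQ_FT / (4 * math.pi * distance_ft ** 2)


at_100_ft = body_exposure_watts(TOWER_WATTS, 100)
at_1_mile = body_exposure_watts(TOWER_WATTS, FEET_PER_MILE)

print(f"100 ft: {at_100_ft:.1e} W")   # 8.0e-04
print(f"1 mile: {at_1_mile:.1e} W")   # 2.9e-07
```

A mile away, the result is about $3 \times 10^{-7}$ Watts, millions of times less than the 2 Watts of a phone held against the head.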

Some people look at pictures like the above and think that the "bigness" of the cell tower means a "powerful" cell tower. Some even think that the whole cell tower is the antenna. In reality, the antennas are inside the little rectangular enclosures mounted at the top of the tower. The reason the cell tower is "big" and "tall" is to provide a sturdy, high mount for the antennas. At UHF and microwave radio frequencies, antenna height is more important than radiated power, because at those frequencies the electromagnetic radiation is largely absorbed and blocked by a great variety of things in the way between the transmitter and the receiver. Again, I will highlight the point that all my calculations for exposed radiated power are pessimistic; in this case the calculations assume that you have a clear, line-of-sight view of the transmitting antenna!

People concerned with cell phone radiation often oppose the construction of cell phone towers near them. This actually has the opposite effect of what they suppose. Whereas the power from a cell phone tower is minuscule at any significant distance, the power transmitted by your cell phone, the one you are holding right up against your head, is throttled down to reduce the drain on its battery whenever the phone senses that it can reach the cell tower at a lower output level, and the nearer the tower, the lower that level. Therefore, to reduce human exposure to cell phone radiation, you want more and closer cell towers. Maybe you want to have them put one in your backyard now, if you want to reduce your exposure to cell phone radiation!

Recently I was sitting in a swimming pool patio of a hotel in Kenya, and I noticed a cell tower in the next property, one that was serving my cell phone. Here is a photograph that I took from where I was sitting:

Using Google Maps, I captured this image showing an aerial view:

You can also access this on Google Maps at this link (GPS coordinates 0.47707° latitude, 35.26202° longitude). Unfortunately, at the time of this writing it seems the satellite photograph is too old to show the cell tower, so I had to estimate about where it was (red arrow), but we are dealing in rough approximations anyway, so that is okay.

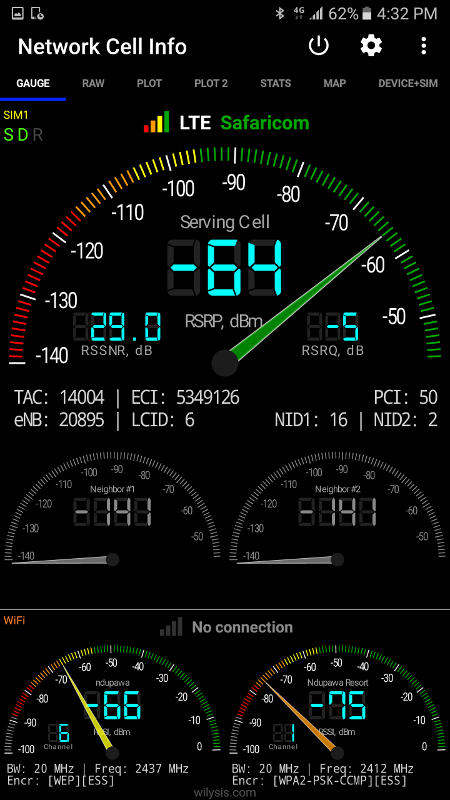

Next, I took readings of the signal strength, as seen by my smartphone, of both the cell tower signal and the hotel's WiFi hotspot, which is located in the building behind me in the patio area. Here is the screenshot of that:

The top gauge shows the cellular signal, and the bottom-left gauge shows the WiFi signal. You will notice that they are approximately the same. Order-of-magnitude calculations show that this makes sense. If the cell tower were putting out 10 Watts and the WiFi hotspot 0.1 Watts, that is a hundredfold difference in transmitted power. If the cell tower is a few hundred feet away and the WiFi hotspot a few tens of feet away, that is a tenfold difference in distance, and by the inverse-square law (the expanding area of a sphere as a function of its radius, as I have been repeatedly citing) a tenfold difference in distance means a hundredfold difference in received power. The two hundredfold factors cancel, so the two signals should arrive at roughly the same strength. This shows that if you have a cell tower next door to your property, the power from it at that distance will be about the same as from a WiFi hotspot in your house. Again, neither is significant compared to the "2 Watt" transmitting power of your cell phone, the one you press up against your body.
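
The cancellation can be checked numerically. In this sketch the 300 foot and 30 foot distances are hypothetical stand-ins for "a few hundred feet" and "a few tens of feet," and the transmitter powers are the rough figures from the text.

```python
import math

# Rough figures from the text; the two distances are hypothetical stand-ins.
TOWER_WATTS, TOWER_DISTANCE_FT = 10.0, 300.0
WIFI_WATTS, WIFI_DISTANCE_FT = 0.1, 30.0


def power_density(source_watts: float, distance_ft: float) -> float:
    """Watts per square foot at distance_ft, spreading source_watts over a sphere."""
    return source_watts / (4 * math.pi * distance_ft ** 2)


power_ratio = TOWER_WATTS / WIFI_WATTS                   # 100x more transmitted power
distance_ratio = TOWER_DISTANCE_FT / WIFI_DISTANCE_FT    # 10x farther away
received_ratio = (power_density(TOWER_WATTS, TOWER_DISTANCE_FT)
                  / power_density(WIFI_WATTS, WIFI_DISTANCE_FT))

print(f"transmit power ratio: {power_ratio:.0f}")
print(f"distance ratio:       {distance_ratio:.0f}")
print(f"received power ratio: {received_ratio:.2f}")   # 1.00
```

The received ratio comes out to the transmit ratio divided by the square of the distance ratio, 100 / 10² = 1.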

Then there will inevitably come the objection that the WiFi or cell tower is exposing you around the clock, but the cell phone only during a call. Let's do the math on that, because it is easy. Suppose you live 30 feet away from your WiFi hotspot all day and night long. From the previous calculation, you might be exposed to 0.0001 Watts. If you assume that 1 Watt of the cell phone's output goes into your body (and the other 1 Watt away from it), then the amount of time per day that you would have to be on a cell phone call to equal the WiFi exposure would be 24 hours × 0.0001/1 = 0.0024 hours, or about 9 seconds a day. In other words, the cell phone radiation against your body is about 10,000 times greater than that of the WiFi 30 feet away, so if you used the cell phone for more than 9 seconds a day, you would exceed the cumulative exposure from the WiFi, even if you stayed 30 feet away from it all day and night long. This calculation doesn't even include the periodic communication that goes on in the background between the cell phone and the cell towers when you are not making a call, which is part of the obligatory administrative protocol between the cell phone, the cellular network, and the service provider. This becomes more frequent especially when driving, as responsibility for communicating with your cell phone is handed off from tower to tower as you move out of range of one and into range of another.
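
The same comparison in Python, treating exposure as energy (power multiplied by time). The inputs are the rough worst-case figures used above: 0.0001 Watts from the WiFi at 30 feet, and 1 Watt into the body from a phone held to the head; the variable names are illustrative.

```python
WIFI_EXPOSURE_WATTS = 0.0001   # 30 ft from a 0.1 W hotspot (earlier estimate)
PHONE_INTO_BODY_WATTS = 1.0    # half of a 2 W phone's output, per the text
SECONDS_PER_DAY = 24 * 60 * 60

# Energy (joules) = power (watts) x time (seconds)
wifi_joules_per_day = WIFI_EXPOSURE_WATTS * SECONDS_PER_DAY

# Call time that delivers the same energy as a full day near the WiFi
equivalent_call_seconds = wifi_joules_per_day / PHONE_INTO_BODY_WATTS
exposure_ratio = PHONE_INTO_BODY_WATTS / WIFI_EXPOSURE_WATTS

print(f"equivalent call time: {equivalent_call_seconds:.1f} seconds per day")  # 8.6
print(f"phone vs WiFi power:  {exposure_ratio:,.0f}x")                         # 10,000x
```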

And what of the 1,000,000 Watt commercial radio and TV broadcast stations that have been broadcasting for half a century or more? Where has the outcry about them been over the last half century, and even now?

Yet no other common radio device compares to a 2 Watt cell phone pressed up against your body. Cordless telephones use only a small fraction of that power, because they only have to reach their base station inside the same house. WiFi only has to reach the devices in your house; two houses away, you cannot even connect to it. Whoever heard of connecting to your friend's WiFi hotspot from your house, when he lives across town? Other household wireless computer devices and the like are of similarly minuscule power.

Keep in mind that the "2 Watt cell phone" is not transmitting all the time, or necessarily at the full 2 Watts! If it were, your phone's battery would not last all day even sitting idle in your pocket; the transmitter alone would drain it in a few hours. Modern smartphones have batteries ranging in size from about 3000 milliamp-hours (mAh) to 5000 mAh, at a nominal 3.7 Volts. Since Watts = Volts × Amps, the stored energy in Watt-hours is Volts × Amp-hours. For a typical small smartphone, 3.0 Amp-hours × 3.7 Volts = 11.1 Watt-hours, and 11.1 Watt-hours / 2 Watts = 5.55 hours. For a typical large smartphone, 5.0 Amp-hours × 3.7 Volts = 18.5 Watt-hours, and 18.5 Watt-hours / 2 Watts = 9.25 hours. That would be just the drain from the RF output of the transmitter, not including the rest of the electronics (processor, memory, and so on) that drain the battery running background tasks. Again, to conserve battery, the phone transmits at only enough output power to reach the cell tower, and when idle it turns on the transmitter only very briefly, just long enough to ping the cell tower and establish its presence with very short, digitally encoded messages. "Zit-zit....zit-zit....zit-zit." If you have ever heard that interference on an AM radio or some other electronic device with a speaker that is susceptible to interference, each little "zit" is how long the transmitter is actually on: hardly at all over the time the phone sits idle in your pocket.
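
The battery arithmetic in a short sketch: capacity in milliamp-hours is converted to amp-hours, multiplied by the cell voltage to get Watt-hours, and divided by the transmit power. The 3.7 Volt nominal cell voltage and the 2 Watt continuous transmit power are the article's assumptions, and the function name is hypothetical; real phones transmit far less than this most of the time.

```python
NOMINAL_CELL_VOLTS = 3.7   # typical lithium-ion cell voltage, as used in the text
TRANSMIT_WATTS = 2.0       # worst-case continuous RF output, as used in the text


def hours_at_full_transmit(capacity_mah: float,
                           volts: float = NOMINAL_CELL_VOLTS,
                           watts: float = TRANSMIT_WATTS) -> float:
    """Hours a battery could feed the transmitter alone at a constant output."""
    watt_hours = capacity_mah / 1000 * volts   # mAh -> Ah, then Ah x V = Wh
    return watt_hours / watts


for mah in (3000, 5000):
    print(f"{mah} mAh: {hours_at_full_transmit(mah):.2f} hours")
```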

Also, since the mid-twentieth century, all kinds of people have been using two-way radios attached to their belts for communications, such as police, fire, security, construction workers, and so on. These radios typically range from half a watt to ten watts of power output. Where was the outcry and concern about that over the decades? Some may object that these are typically in a lower frequency band (VHF/UHF), but then again, infrared and visible light are electromagnetic radiation in a higher frequency band. If electromagnetic radiation in the microwave frequency band is somehow spooky, then shouldn't infrared radiation and visible light be many times more spooky? Remember the Kenner Easy-Bake Oven of the 1960s, made for aspiring girl cooks, which used two 100-Watt bulbs as a source of heat? How powerful were these toys? Powerful enough to bake a small cake or brownies. And since the visible light from those 100-Watt bulbs is on the order of 200,000 times the frequency of the microwaves from a microwave oven, and that much more energetic, where is the outcry about potential spooky health effects from a Kenner Easy-Bake Oven, sold at a toy store for children to use? Whereas a microwave oven uses electromagnetic radiation at a frequency of 2.45 GHz, the food in the Kenner Easy-Bake Oven is exposed to electromagnetic radiation at frequencies of up to 730,000 GHz!

Yet the question remains whether a 2 Watt cell phone could have any adverse health effects from a purely biological standpoint. There are conflicting reports in the scientific literature about this, and in Part II I will offer an explanation that is entirely spiritual, and not physical. But before we get there, you must be wary of internet websites and other agenda-based platforms that only select the scientific studies favorable to their position, rejecting any that are unfavorable, even if those unfavorable constitute the majority. If you want to approach the subject from an educated, rational, intelligent, scientific perspective, then you must adhere to the scientific method, which assesses all the data, and not just that which is favorable to a particular agenda.

Although my field of expertise is not biology, much less medical research, I can still apply a fair amount of common-sense reasoning to the physical phenomena as they apply to the human body.

A 1000 Watt microwave oven would be very dangerous to human health if it were ever operated with the door open and a person near it. The immediate danger would be physical burns, especially since the microwaves immediately penetrate anywhere from half an inch to a couple of inches into the flesh. In particular, the human eye is very susceptible to thermal damage, as it does not efficiently remove heat, and microwave radiation can easily penetrate an inch or so into the eye. But who has ever heard of a microwave oven door interlock failure (one that left the magnetron on when the door was opened)?

By comparison, the heat from a conventional oven does not penetrate the food immediately at all. It relies on conduction and convection: the burner heats the air and the cavity of the oven, the hot air heats only the outer surface of the food, and the surface then heats the inside of the food, all quite slowly, since air is an insulator, not a good conductor of heat.

Now, how many people have heard of someone burned by touching the inside surface or racks of a conventional oven, or a flaming stovetop burner, or a charcoal grill? Probably everyone. The way you get burned in connection with a microwave oven is by touching the heated food, or a food dish that was heated by the food until it, too, was hot enough to burn you.

In general a "Watt" is a "Watt," a practical, standard scientific and engineering measure of power, and a 2 Watt cell phone shouldn't be expected to have any more "burning" power than a 2 Watt light bulb or heating element, particularly since the 2 Watts are spread out in all directions. By contrast, a 100 Watt incandescent light bulb does get hot enough to burn, though not instantly, and only if you touch the glass of the bulb, which has been storing heat from the 100 Watt filament for a long time. A 1500 Watt hair dryer or a 1500 Watt space heater, on the other hand, can easily burn you if you are not careful with it.

A laser pointer puts out 0.005 Watts of power in pure light, in a dot about 0.1 inch in diameter, similar to the intensity of the midday sun on the same 0.1 inch diameter area, which is why you can hardly see a laser pointer's dot on something that is in direct sunlight. There are very few reported cases of permanent eye damage from ordinary low-power laser pointers, especially since people naturally blink and turn their eyes away from either direct sunlight or a laser pointer shining into their eyes. Regardless, this is only an effect on vision, because of the sensitivity of the eye and its ability to focus that dot into a much smaller dot on the retina; there is no effect on skin or anything else at such power levels.
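The comparison between a laser pointer and sunlight can be checked with a little geometry: the sunlight falling on the dot is the intensity of direct sunlight times the dot's area. This sketch assumes about 1000 Watts per square meter of direct sunlight (the figure this article uses for sunbathing) and a circular 0.1 inch dot; the helper names are illustrative.

```python
import math

SUN_W_PER_M2 = 1000.0          # approximate intensity of direct sunlight (assumption)
SQ_M_PER_SQ_IN = 0.0254 ** 2   # 1 inch = 0.0254 m exactly

def circle_area_sq_in(diameter_in: float) -> float:
    """Area of a circle, in square inches, from its diameter in inches."""
    return math.pi * (diameter_in / 2) ** 2

def sunlight_watts(diameter_in: float) -> float:
    """Direct-sunlight power falling on a circular spot of the given diameter."""
    return SUN_W_PER_M2 * SQ_M_PER_SQ_IN * circle_area_sq_in(diameter_in)

print(f"sunlight on a 0.1 inch dot: {sunlight_watts(0.1):.4f} W")
print("laser pointer output:       0.005 W")
```

It prints about 0.0051 W of sunlight on the dot, essentially the same as the pointer's 0.005 Watts.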

By contrast, a 2 Watt laser, which a consumer can only obtain on the black market, concentrated into that same 0.1 inch diameter dot, will burn the skin quite quickly, or the retina of a person's eye before he even has time to blink. But again, that is just heat from the intensity of the light. You could do the same thing using sunlight and a 2-inch diameter magnifying glass, which collects approximately 2 Watts of sunlight over its 2-inch diameter area and focuses it into a small dot that can burn the skin the same way.
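The magnifying-glass figure follows from the same geometry, and the ratio of the lens area to the dot area shows how concentrated the focused light is. A rough sketch, assuming about 1000 Watts per square meter of sunlight and an ideal, lossless lens that squeezes everything it collects into a 0.1 inch dot:

```python
import math

SUN_W_PER_SQ_IN = 1000.0 * 0.0254 ** 2   # ~0.645 W per square inch of direct sunlight (assumption)

def disk_area_sq_in(diameter_in: float) -> float:
    """Area of a circular disk, in square inches."""
    return math.pi * (diameter_in / 2) ** 2

lens_d, dot_d = 2.0, 0.1   # diameters in inches

# Power the lens intercepts from direct sunlight.
lens_power_w = SUN_W_PER_SQ_IN * disk_area_sq_in(lens_d)
# Ideal-lens concentration: same power squeezed into a smaller area.
concentration = disk_area_sq_in(lens_d) / disk_area_sq_in(dot_d)

print(f"power collected by the lens: {lens_power_w:.2f} W")
print(f"intensity at the dot: about {concentration:.0f} times direct sunlight")
```

It gives about 2.03 Watts collected and a concentration of about 400 times ordinary sunlight, which is why the focused dot burns while the same sunlight spread over the lens does not.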

So, you see that the laser is not spooky at all, either. It is just a bright light that can be made to stay in a straight beam that does not spread out from its source (or, at least, hardly at all, for a very long distance).

And, what about exposure to direct sunlight? The power of direct sunlight is roughly 1000 Watts per square meter. That means that a 10 square-foot (planar) person sunbathing could receive around 1,000 Watts of sunlight, distributed over the surface of his body!
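Converting the sunbather's area to metric shows where "around 1,000 Watts" comes from. A minimal sketch, assuming the article's 10 square feet of body area facing the sun and roughly 1000 Watts per square meter of direct sunlight:

```python
SUN_W_PER_M2 = 1000.0          # approximate direct sunlight (assumption)
SQ_M_PER_SQ_FT = 0.3048 ** 2   # 1 foot = 0.3048 m exactly

body_area_sq_ft = 10.0                        # sunbather's area facing the sun
body_area_m2 = body_area_sq_ft * SQ_M_PER_SQ_FT
sunlight_w = SUN_W_PER_M2 * body_area_m2      # power intercepted by that area

print(f"{body_area_m2:.3f} square meters -> about {sunlight_w:.0f} W of sunlight")
```

Ten square feet is about 0.93 square meters, so the sunbather intercepts roughly 930 Watts, which rounds to the article's "around 1,000 Watts."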

Obviously, light only penetrates a short distance into the skin before it is all absorbed. If you shine a flashlight through your palm, you can see that there is not much light left that passes through, but just a little bit of a dim, red glow, if even that. On one hand, the light does not affect the whole body. A sunburn is only skin-deep. On the other hand, all that power is absorbed by the skin, whereas the flesh underneath gets almost nothing. Microwave radiation penetrates anywhere from a half inch to two inches into the flesh. On one hand, this means that it can affect all that flesh. On the other hand, its power is more evenly distributed (i.e. diluted) through that whole volume and not concentrated as much on the surface.

Now, I have been citing power distributed over the surface of a person's body. In the "whole body" examples that I have given, all the power is distributed over the surface area of the "whole body" (the part facing the source of the radiation). That is why 1000 Watts of sunlight does not burn a sunbather (excluding the effect of the ultraviolet light), but a 1000 Watt microwave oven quickly heats, and can burn, a small item of food. If the microwave oven door were opened and the 1000 Watts of microwave energy were distributed evenly over "10 square feet" of a human body, it would not get very hot very fast. This should be obvious to anyone accustomed to microwave cooking, and is one reason why it is not practical to cook a whole turkey in a microwave oven, since it would take so very long (among other reasons, of course).

Suppose that you built a microwave oven as big as a room, with all metal walls, powered it with the same 1000 Watt magnetron, and put a 180-pound man like myself in it. Would I even get hot? Suppose a one-eighth slice of a 3-pound pizza takes 30 seconds to heat up in a 1000 Watt microwave oven. I am 180 lbs / (3 lbs / 8) = 480 times the mass of the slice of pizza. In other words, heating my body in that microwave oven would be like heating 480 slices of pizza all at the same time. It would take about 480 * 30 seconds = 14,400 seconds = 4 hours to heat my body to the temperature of the slice of pizza, if I were put in a microwave oven the size of a room. That is so slow that the body would just take care of it through its normal processes of internal temperature regulation. Doing the calculation a different way, the 20 square feet, or 2880 square inches, of surface area of my body (using the whole surface now, since it would be irradiated from all sides) would be exposed to 1000 Watts / 2880 square inches = 0.35 Watts per square inch. I would expect that I might just feel the warmth in the same manner as I would when sunbathing, or from a heat lamp, or a fireplace or wood-burning stove at some distance. On the other hand, since the 0.35 Watts per square inch is diluted over the one-half to two inches of flesh that it penetrates, maybe I wouldn't feel anything at all.
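Both estimates in the room-sized-oven paragraph can be reproduced directly. This sketch keeps the paragraph's own assumptions: heating time scales with mass at the same power, all 1000 Watts are absorbed by the body, and the body's own temperature regulation is ignored.

```python
PIZZA_LB = 3.0
SLICE_LB = PIZZA_LB / 8        # one-eighth slice
SLICE_HEAT_S = 30.0            # seconds to heat one slice at 1000 W
BODY_LB = 180.0

MAGNETRON_W = 1000.0
BODY_SURFACE_SQ_FT = 20.0      # whole-body surface, irradiated from all sides
SQ_IN_PER_SQ_FT = 12 ** 2

# Estimate 1: at the same power, heating time scales with mass.
mass_ratio = BODY_LB / SLICE_LB
hours = mass_ratio * SLICE_HEAT_S / 3600

# Estimate 2: the magnetron's power spread over the body's whole surface.
surface_sq_in = BODY_SURFACE_SQ_FT * SQ_IN_PER_SQ_FT
watts_per_sq_in = MAGNETRON_W / surface_sq_in

print(f"{mass_ratio:.0f} slices' worth of mass, about {hours:.1f} hours")
print(f"{surface_sq_in:.0f} square inches, {watts_per_sq_in:.2f} W per square inch")
```

It reproduces the 480 slices, 4 hours, 2880 square inches, and 0.35 Watts per square inch quoted in the paragraph.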

The proposition of a human being in a 1000 Watt microwave oven as big as a room may cause many to recoil in fear at the very thought of it. But the warmth that you feel from exposure to direct sunlight is mostly from the infrared radiation, below the visible light range, but far above that of the 2,450,000,000 cycles per second (2.45 Gigahertz) frequency of the radiation from a microwave oven. Both are 100% heating from electromagnetic radiation. No heat from the sun is conducted, because 93 million miles of vacuum separates us from the physical sun. Why is it that the microwave radiation is commonly perceived as "spooky," but not the infrared radiation that you intentionally expose yourself to using sunlight, a heat lamp, a fireplace, or a wood burning stove? Infrared radiation spans a frequency range of roughly 300,000,000,000 cycles per second (300 Gigahertz) to 400,000,000,000,000 cycles per second (400 Terahertz)! And again, although the microwave radiation penetrates more deeply, this also means that the power is distributed more evenly throughout a larger volume (in other words, more diluted), instead of being all absorbed by the living tissues of the skin closer to the surface.

Then, to add to that, the human being in the 1000 Watt microwave oven as big as a room is not exposing himself to ionizing, ultraviolet radiation, such as from the sun, which causes direct, immediate, chemical and molecular changes to living tissue in the skin, and not just thermal heating. The approximate power of the UV radiation is about 4% of the total sunlight, with infrared and visible light comprising the rest (see https://en.wikipedia.org/wiki/Sunlight), so our "10 square foot" man sunbathing might receive something like 40 Watts of ultraviolet radiation over the exposed surface of his body.

We could also make some more relevant comparisons. For example, let us suppose that a cell phone has an antenna that covers an area of one square inch on the back of the cell phone. That means that the "1 Watt" of power going into the body (half of the phone's 2 Watts) will travel through a cross section of flesh that is one square inch at the surface. This is a very crude metric, but I will use it for some more calculations.

How much of the power that I previously calculated for the whole body will each square inch of a person's cross section receive? My "10 square foot" person has $10\ \text{ft}^2 \times (12\ \text{in}/1\ \text{ft})^2 = 1440$ square inches of cross-sectional surface area. The amount of power that each square inch receives is then:

- 1000 Watts / 1440 = 0.7 Watts per square inch for the sunbather

- 1000 Watts / 2880 = 0.35 Watts per square inch for the man in the oversized microwave oven

- 0.008 Watts / 1440 = 0.000005 Watts per square inch for the 100 Watt bulb 100 feet away

- 0.0008 Watts / 1440 = 0.0000005 Watts per square inch for the cell tower 100 feet away

- 0.0008 Watts / 1440 = 0.0000005 Watts per square inch for the WiFi hotspot 10 feet away

- 0.0001 Watts / 1440 = 0.00000007 Watts per square inch for the WiFi hotspot 30 feet away

- 0.00000003 Watts / 1440 = 0.00000000002 Watts per square inch for the cell tower 1 mile away
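The per-square-inch figures in the list are just the whole-body numbers divided by the exposed area. A short sketch using the list's own inputs, with 1440 square inches for a body facing the source and 2880 square inches for the all-sides case; the case labels are only descriptive strings:

```python
FRONT_SQ_IN = 10 * 12 ** 2   # "10 square foot" cross section facing the source = 1440
WHOLE_SQ_IN = 20 * 12 ** 2   # whole surface for the room-sized oven case = 2880

# (description, whole-body Watts, exposed square inches)
cases = [
    ("sunbather", 1000.0, FRONT_SQ_IN),
    ("man in oversized microwave oven", 1000.0, WHOLE_SQ_IN),
    ("100 Watt bulb 100 feet away", 0.008, FRONT_SQ_IN),
    ("cell tower 100 feet away", 0.0008, FRONT_SQ_IN),
    ("WiFi hotspot 10 feet away", 0.0008, FRONT_SQ_IN),
    ("WiFi hotspot 30 feet away", 0.0001, FRONT_SQ_IN),
    ("cell tower 1 mile away", 0.00000003, FRONT_SQ_IN),
]

per_sq_in = {name: watts / area for name, watts, area in cases}
for name, value in per_sq_in.items():
    print(f"{name:34s} {value:.1e} W per square inch")
```

Some computed values are a little larger than the list's entries (for example about 5.6 millionths rather than 5 millionths of a Watt for the light bulb), because the list keeps only about one significant figure.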

Here is a table summarizing most of the numbers that I have cited so far:

| Source or situation | Power |
|---|---|
| Food inside microwave oven | 1000 Watts |
| Person sunbathing | 1000 Watts |
| 100 Watt light bulb (at filament) | 100 Watts |
| Cell tower antenna | 10 Watts |
| 2 Watt laser | 2 Watts (all focused into 0.1 inch diameter dot) |
| 2 inch diameter magnifying glass in sunlight | 2 Watts (can be focused into 0.1 inch diameter dot) |
| Cell phone | 2 Watts |
| 1/2 cell phone power into body | 1 Watt |
| Person sunbathing (each square inch, facing sun) | 0.7 Watts |
| Person inside giant microwave oven (each square inch) | 0.35 Watts |
| 100 Watt light bulb on hand 40 inches away | 0.1 Watts |
| WiFi hotspot (at the device) | 0.1 Watts |
| 100 Watt light bulb on whole body 100 feet away | 0.008 Watts |
| Microwave oven leakage 2 inches away (per cm²) | 0.005 Watts |
| Laser pointer | 0.005 Watts (all focused into 0.1 inch diameter dot) |
| Cell tower on whole body 100 feet from cell tower antenna | 0.0008 Watts |
| WiFi hotspot on whole body 10 feet away | 0.0008 Watts |
| WiFi hotspot on whole body 30 feet away | 0.0001 Watts |
| Cell tower on whole body 300 feet from cell tower antenna | 0.0001 Watts |
| 100 Watt light bulb 100 feet away, per square inch | 0.000005 Watts (5 millionths of a Watt) |
| Cell tower 100 feet away, per square inch | 0.0000005 Watts |
| WiFi hotspot 10 feet away, per square inch | 0.0000005 Watts |
| WiFi hotspot 30 feet away, per square inch | 0.00000007 Watts (70 billionths of a Watt) |
| Cell tower 300 feet away, per square inch | 0.00000007 Watts (70 billionths of a Watt) |
| Cell tower intensity on whole body 1 mile away | 0.00000003 Watts |
| Cell tower intensity 1 mile away, per square inch | 0.00000000002 Watts (20 trillionths of a Watt) |


Since even the fanatics have come to reckon with the fact that even 2 Watts of non-ionizing electromagnetic radiation (not focused into a small, 0.1 inch dot, of course) isn't really enough to burn flesh by heating it, the next theory is about non-thermal effects.

The obvious non-thermal effect is the induction of electrical currents or magnetic flux inside the human body, a valid subject of inquiry, since the human body runs on electro-chemical processes. Scientifically, there is simply nothing else a non-ionizing (i.e. excluding UV, X-rays, gamma radiation, etc.) electromagnetic field can do.

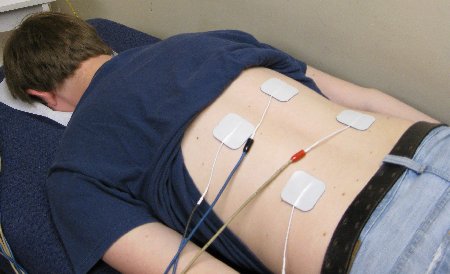

Since the human body contains electrically conductive tissues, currents must be induced. That is just a matter of plain physics. But a bit of common sense should prevail here, too. If the electrical current is high enough, muscles can twitch, as in Galvani's famous experiment in 1780 with dead frog legs. When I had a back problem, before I understood the promise of divine health, I visited a chiropractor regularly, and he used interferential muscle therapy, as most chiropractors do, which felt good, was relaxing, and reduced pain and muscle spasm. I eventually bought a battery-operated device to use on myself at home. So here I subjected my flesh to intentional electrical currents from a source of perhaps up to 40-80 Volts (although in reality somewhat less, because of the conductive gel on the electrodes reducing the electrical skin resistance). I turned the voltage up as high as I could take it without it becoming painful. Here is a photograph of someone in a chiropractor's office getting this therapy:

So, now we must ask this question: Why does the speculation out there focus on ill-effects, if there is an effect? If we don't have a grasp on the science behind any supposedly measurable effects on human tissue, if there is even perhaps an effect, then why isn't there equal speculation that perhaps cellphone radiation might actually be good for you? Interferential muscle therapy, which purposely injects electrical currents into muscles, can relax muscles that are in spasm. Now they sell them also as a gimmick for passively developing muscle strength, since the electrical currents stimulate the muscle fibers to contract and relax.

There is no way that a WiFi device could induce currents inside the body strong enough to have any such profound effect without your being able to feel it. There isn't even 40-80 volts of anything anywhere inside a WiFi device or cell phone to supply to the transmitting antenna, not to mention the inefficiency of converting this to an electromagnetic field and then picking it up at a distance! And then, given the previous calculation of the 10 square foot man, 10 feet from the WiFi access point, receiving 0.0008 Watts, there is hardly anything to induce an electrical current from, considering that the human body is nothing like what would be needed to make an efficient antenna, from an engineering perspective.

I can see the potential of a 2 Watt cell phone inducing some very small currents while the phone is pressed to the body during an active call, currents so small that you cannot feel them as any sensation of an electric shock; and that is electric current, not some spooky, voodoo force. But I will defer to Part II on that issue.

Again, although I am not a medical professional, it does not take more than a basic understanding of the chemistry and physics involved here, an understanding that you may remember from your high school chemistry and physics classes and textbooks. The danger in ionizing radiation (ultraviolet radiation and higher frequencies) is that the energy is high enough and the wavelength short enough to disrupt chemical bonds by knocking valence electrons off at a molecular scale, creating free radicals that damage living tissue and the living organism. But microwave wavelengths are on the order of a billion (1,000,000,000) times longer than chemical bonds (about 5 inches long, compared to a few billionths of an inch). By illustration, visualize a wave coming in from the sea or from a lake to the shore. On that wave rides a bit of seaweed, a leaf, maybe even an insect or a seed floating on the wave. But the wavelength (the wave's length) is too long to break apart the seaweed, the leaf, the insect, or the seed. Each one just rides the wave unharmed, because it is so much smaller than the length of the wave. Again I will repeat the illustration of getting dirt off of a dirty floor mat. If you take it outside and hold it vertically, only dirt that was not stuck (i.e. bonded) to it will drop off the surface. Next, if you just wave it back and forth slowly (low frequency), you will not eject the dirt stuck in it. But if you shake it vigorously enough (high frequency), this will knock the dirt off of it. This principle is similar to that of ultrasonic cleaners, where you put the dirty jewelry or other object in water, and the sound waves shake the dirt off. But you wouldn't be able to shake the dirt off by holding the object in the water and slowly waving it back and forth, even though your hand and arm are far stronger than the ultrasound transducer in the machine, strong enough that you could easily destroy the transducer diaphragm by pressing on it with your thumb.
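The wavelength comparison can be worked out from wavelength = speed of light / frequency. This sketch assumes a 2.45 GHz microwave oven and uses a carbon-carbon single bond of about 0.154 nanometers as a representative chemical bond (an illustrative choice; most bonds are roughly 0.1 to 0.3 nanometers, which changes the ratio only modestly).

```python
C_M_PER_S = 299_792_458.0   # speed of light, meters per second
M_PER_INCH = 0.0254

freq_hz = 2.45e9                        # microwave oven frequency
wavelength_m = C_M_PER_S / freq_hz      # wavelength = c / f
bond_length_m = 0.154e-9                # typical C-C single bond (illustrative assumption)
ratio = wavelength_m / bond_length_m

print(f"wavelength:  {wavelength_m / M_PER_INCH:.2f} inches")
print(f"bond length: {bond_length_m / M_PER_INCH:.1e} inches")
print(f"ratio:       {ratio:.1e}")
```

The result is a wavelength of about 4.8 inches, a bond length of about 6 billionths of an inch, and a ratio of nearly a billion.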

Then, after all the discussion of electrical effects, there is the question about the magnetic field. But that is an even more elusive issue. The human body contains no ferromagnetic material, the kind of metal, like iron or steel, that a magnet attracts. People are routinely exposed to the massive, approximately 1.5 Tesla or greater magnetic fields of medical MRI scanners and feel no effect of them at all. These magnetic fields are very powerful. Refer to the following photograph of a metal cart that got sucked into an MRI machine, or the video after that of some people experimenting with some steel objects and an office chair with a metal base being sucked into the MRI cavity, producing up to 2000 pounds of force:

Yes, that gauge read "2000 lbs" of force on that office chair. So, now we are concerned about the minuscule magnetic fields in a radio electromagnetic wave that are not powerful enough to move a paper clip?? I defer to Part II.
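To put a number on how minuscule the magnetic field in a radio wave is, one can use the plane-wave relation between average intensity S and RMS magnetic flux density B, namely S = c·B²/μ0. This is a rough sketch under stated assumptions: it treats the signal as a far-field plane wave, and it takes the table's figure for a WiFi hotspot 10 feet away (0.0000005 Watts per square inch) as the intensity; the 1.5 Tesla MRI value is the one quoted for MRI scanners in this article.

```python
import math

MU0 = 4e-7 * math.pi            # vacuum permeability, T*m/A (classic value, very close to current SI)
C_M_PER_S = 299_792_458.0       # speed of light, m/s
SQ_M_PER_SQ_IN = 0.0254 ** 2

def rms_magnetic_field_tesla(intensity_w_per_m2: float) -> float:
    """RMS flux density of a plane wave with the given average intensity (S = c*B^2/mu0)."""
    return math.sqrt(MU0 * intensity_w_per_m2 / C_M_PER_S)

wifi_w_per_sq_in = 0.0000005                        # WiFi hotspot 10 feet away (assumed intensity)
wifi_w_per_m2 = wifi_w_per_sq_in / SQ_M_PER_SQ_IN   # convert to W per square meter
b_wifi = rms_magnetic_field_tesla(wifi_w_per_m2)
b_mri = 1.5                                         # typical MRI field, tesla

print(f"WiFi wave: {b_wifi:.1e} T, MRI: {b_mri} T, MRI is {b_mri / b_wifi:.0e} times stronger")
```

Under these assumptions it gives a field of roughly two billionths of a Tesla, on the order of a billion times weaker than a 1.5 Tesla MRI magnet.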

So, I think this article has covered all the possible effects:

In the U.S., there have been roughly 40,000 people brutally killed in automobiles each year, along with 200,000 serious disabling injuries, and 3,000,000 other injuries, for many decades (https://www.nhtsa.dot.gov/ and others, 1986-2005 statistics). Since usually only one party in a multi-vehicle collision is at fault, the other party is a victim through no fault of his own, making the peril unavoidable even for the most conscientious drivers. Statistically, for 1 out of 80 people, the final cause of death was an automobile accident, and 1 out of 20 people have been disabled from serious injury. When it comes to the automobile, there is just nothing like it, complete with blood and guts, mangled bodies and automobiles, wreaking death and destruction all over on a daily basis, horrible, graphic images of blood and dismembered bodies that you can easily google on the internet. Everyone reading this knows of people killed or seriously injured, and hears about it in the news and drives by the accident scenes. Yet fearful, carnal people seem to just routinely jump into a car and drive it multiple times a day with little to no concern whatsoever, while they worry about other things, like 0.1 Watts of electromagnetic radiation from the nearest WiFi or 0.005 Watts leaking out of their microwave ovens. So, if you are going to think carnally, it doesn't even make sense to worry about electromagnetic phenomena if you are willing to drive or ride in an automobile.

The ultimate hypocrisy is with those who think nothing about driving the automobile while talking on the cell phone, which has been shown by multiple studies and trials to make a person statistically and effectively more impaired than a drunk driver (https://www.ncbi.nlm.nih.gov/pubmed/16884056 and other studies). So, we then have some "drunk drivers," so to speak, concerned about long term electromagnetic health effects, while behaving in a manner that is a threat to public health and safety!

Enough of the carnal thinking!

"...let them rule over the fish of the sea and over the birds of the sky and over the cattle and over all the earth, and over every creeping thing that creeps on the earth." (Gen 1:26, NASB'95)

"...but the earth he has given to the sons of men..." (Psalm 115:16, NASB'95)

"Behold, I have given you authority to tread on serpents and scorpions, and over all the power of the enemy, and nothing will injure you." (Luke 10:19, NASB'95)

"they will pick up serpents, and if they drink any deadly poison, it will not hurt them..." (Mark 16:18, NASB'95)

"With the fruit of a man's mouth his stomach will be satisfied; he will be satisfied with the product of his lips. Death and life are in the power of the tongue, and those who love it will eat its fruit." (Prov 18:20-21, NASB'95)

The last scripture is key to understanding the issue of human electromagnetic susceptibility. It is not a physical issue to us at all, but one of dominion and faith.

When Jesus walked across the Sea of Galilee in John 6, it was to get to the other side. One could come up with all kinds of other religious-sounding explanations for it, but the issue was simple: He was evading the people, who wanted to make him king by force (after he fed the 5000). He had already sent his disciples across in a boat, he didn't have another boat himself, and he needed to get to his disciples; if he had walked around the Sea of Galilee, the people would have found him and continued with their agenda, so he walked across the Sea of Galilee after dark to meet up with his disciples. He was about his business, and the water was not going to be a hindrance to it. Isn't that simple?

If you evaluate it from a natural point of view, Jesus was going to drown and die, plain and simple. The perils to his health by water are frightening in the natural.

But we have "Christ in us, the expectation of glory" (Col 1:27). "...it is no longer I who live, but Christ lives in me; and the life which I now live in the flesh I live by faith in the Son of God..." (Gal 2:20).

We proclaim healing and health in any situation, and by our faith it comes to pass, if we truly believe it. By that same principle, we can proclaim sickness and ill-health in any situation, and by our faith it comes to pass, if we truly believe it.

From this you should begin to see how people are actually getting sick from electromagnetic phenomena. The very fear of it, and the faith in its peril, is enough to become a self-fulfilling prophecy. Whether there are physical, biological processes going on is a moot point; the faith in the infirmity is enough to be the root cause of it, and can drive the infirmity to its physical manifestation.

It is easy to see then, how this can even apply to "scientific" studies. One can even put a "curse," so to speak, on the results, and the results can be expected to line up with the "curse" invoked. The spiritual therefore takes precedence over the physical manifestation, and drives the physical results. Others buying into the results convince themselves that they are likewise susceptible, and then they run with the curse and become verifiably sick themselves.

As a result, those who have faith for electromagnetic radiation producing harmful effects will reap harmful effects. Those who have faith in God's protection will be immune to such effects. If you convince a person that they will become ill as a result of radio frequency electromagnetic radiation, then they certainly will become ill, because they were "convinced" of it, and that is the definition of faith.

Hebrews 11:1 defines faith:

"Faith/belief" (πιστις = "pistis")

"is" (εστιν = "estin")

"understanding/assumption" (υποστασις = "[h]upo-stasis")

"of-being-expected" (ελπιζομενων = "elpizomenon")

"conviction/proof" (ελεγχος = "elegchos")

"of-matters" (πραγματων = "pragmaton")

"not" (ου = "ou")

"being-observed" (βλεπομενων = "blepomenon")

If deleterious electromagnetic health effects are the "understanding/assumption" of what is "being expected," the "conviction/proof of matters not being-observed," then this defines "faith" for electromagnetic sickness. It is that simple.

Put in more general terms, if you believe that you will get sick, you will; if you believe that you are protected, then you are. This is not a "faith in faith" proposition. The assurance of health comes from the Word of God, based on the price Jesus paid for it in his own body, which gives us sure promises, so we ultimately have faith in God for it. Contrariwise, lack of assurance amounts to doubt and unbelief regarding the Word of God, which puts stake in human understanding and demonic deception, leaving one open to the elements of nature, the devil, and his works.

The only right strategy and way to view the issue, which as I said, makes all the natural explanations in Part I moot, is to be wholly about the business of proclaiming and demonstrating the gospel of the Kingdom of God, seeking to set free those captive and in bondage to fear, and the devil and his works, and making disciples of all kinds of people according to the commission that Jesus gave us. Put another way, the Christian is not afraid of the elusive electromagnetic perils and fears that are being circulated on the Internet for the purpose of evoking fear, because we do not belong to the kingdoms of this world, but to the Kingdom of God, we are about that business, and God will back us up in that regard through the necessary immunity and by the power of the Spirit to get our job done.

This is not to say that we flaunt immunity for show, or suppose that we should remove microwave oven door interlock switches from the design of microwave ovens, but that our concern is elsewhere, having to do with those desperately in need of the gospel, and we should be consumed with that, being liberators of others, rather than playing the victim role.

Those who claim to believe in divine health and healing but put stake in electromagnetic curses are not fooling either the devil or God. It is double-mindedness, plain and simple.

This whole article can be applied in an analogous way to hundreds of other modern-day perils having to do with food, nutrition, chemical pollutants, personal safety, and other things. In other words, it is not enough to be satisfied about only radio frequency electromagnetic radiation. The proposition must apply to every other part of our lives.

If we have faith (believe) that all these things can harm us, then they likely will because of our faith in them and the power of our words, but if we understand that we can live empowered by the Holy Spirit, as Jesus did, then we can put all of our physical, mental, and spiritual efforts into proclaiming the gospel and reaching those who are in bondage to the things of this world (including bondage to fear of electromagnetic radiation).

I grant this work to the public domain.